In the ever-evolving world of AI music artists, a new rhythm is emerging. These innovative creators are not just transforming how music is made; they're reshaping the industry's landscape.

Let's dive into this melodious journey and discover how AI is harmonizing with human creativity.

The Role of AI in the Modern Music Industry

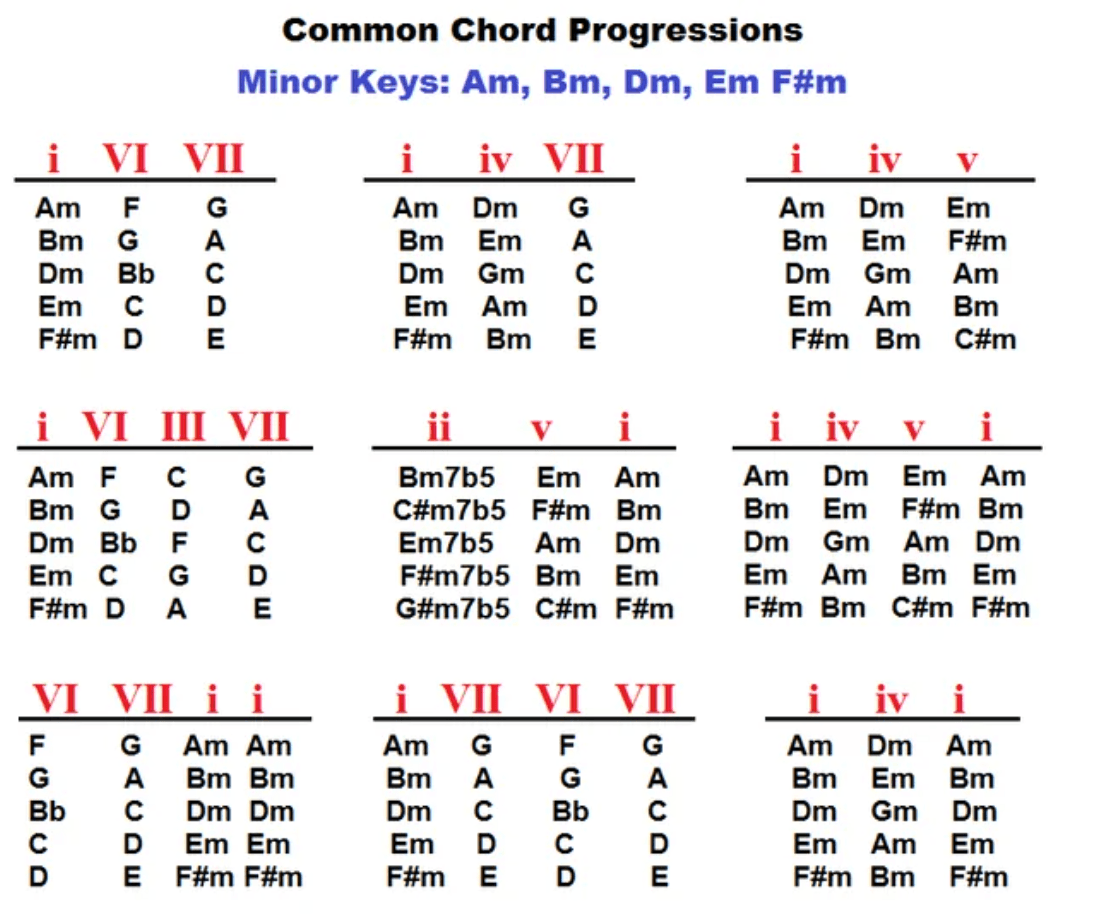

The integration of AI in the music industry signifies a major shift in how music is created and produced. AI music tools, through their sophisticated algorithms, are instrumental in streamlining various aspects of music production.

They assist in generating chord progressions, crafting melodies, and even in mixing and mastering tracks.

Notably, these tools offer a new dimension of creativity, allowing musicians and producers to experiment with unique compositions and sounds that were previously unattainable or highly labor-intensive.

AI in Composition and Songwriting

In the realm of composition, AI can now generate, compose, and enhance musical content, work that was traditionally done by humans.

This technology takes various forms, from generating an entire song to writing specific aspects of a composition.

For instance, AIVA, a compositional AI program, has been trained with over 30,000 iconic scores, allowing users to generate music in various styles with options for key signature, pacing, and instrumentation.

Enhancing the Production Process

AI also plays a crucial role in the production process. Tools such as LANDR's AI mastering service analyze a track and let the user choose a mastering style and loudness level, automating much of the mastering process.

LANDR's service alone has mastered more than 20 million tracks, which shows how widely this kind of technology has been adopted.
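Loudness targeting is the easiest part of automated mastering to illustrate. The sketch below is not LANDR's algorithm. It is a simplified, hypothetical example that measures a track's RMS level in dBFS and applies a single static gain to reach a chosen target. Real mastering tools measure loudness in LUFS (ITU-R BS.1770, with K-weighting and gating) and use limiters instead of the hard clipping assumed here. The function names and the -14 default are illustrative assumptions.

```python
import math


def rms_dbfs(samples):
    """Root-mean-square level of float samples in [-1.0, 1.0], expressed in dBFS."""
    if not samples:
        raise ValueError("no samples")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence has no finite level
    return 10.0 * math.log10(mean_square)


def normalize_to_target(samples, target_dbfs=-14.0, ceiling=1.0):
    """Apply one static gain so the RMS level hits target_dbfs, then hard-clip to +/-ceiling.

    Assumption: hard clipping stands in for the limiter a real mastering chain would use.
    """
    current = rms_dbfs(samples)
    if current == float("-inf"):
        return list(samples)  # nothing to scale
    gain = 10.0 ** ((target_dbfs - current) / 20.0)  # convert the dB difference to a linear factor
    return [max(-ceiling, min(ceiling, s * gain)) for s in samples]


# Usage: a square wave at half amplitude sits at about -6 dBFS RMS; bring it to -12 dBFS.
quiet = [0.5, -0.5] * 100
louder = normalize_to_target(quiet, target_dbfs=-12.0)
print(round(rms_dbfs(quiet), 2), round(rms_dbfs(louder), 2))
```

A single gain keeps the sketch readable; a production tool would also shape dynamics and tone, which is where the "style" choices come in.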

AI's Impact on the Music Listening Experience

AI and machine learning (ML) are also transforming how we interact with music online. Platforms like YouTube, Spotify, Apple Music, and Pandora use AI to enhance the user experience, offering personalized track recommendations, eliminating dead air, and adjusting volume in real time.

This level of personalization has dramatically changed how listeners discover and enjoy music, making the experience more tailored to individual tastes.
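One simple way to picture personalized recommendations is content-based filtering. Each track is described by a feature vector, the vectors of what a listener has already played are averaged into a taste profile, and unplayed tracks are ranked by cosine similarity to that profile. This is a toy sketch: the feature names and values are invented, and the streaming services named above are generally understood to combine many more signals (listening behavior across users, audio analysis, context) in far more complex systems.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(listened, catalog, k=2):
    """Return up to k unplayed track names, ranked by similarity to the listener's average taste."""
    if not listened:
        raise ValueError("need at least one listened track")
    dims = len(catalog[listened[0]])
    # Taste profile: element-wise mean of the feature vectors of tracks already played.
    profile = [sum(catalog[t][i] for t in listened) / len(listened) for i in range(dims)]
    candidates = [t for t in catalog if t not in listened]
    candidates.sort(key=lambda t: cosine_similarity(profile, catalog[t]), reverse=True)
    return candidates[:k]


# Hypothetical features per track: [energy, acousticness, danceability], each in 0..1.
catalog = {
    "track_a": [0.9, 0.1, 0.8],
    "track_b": [0.85, 0.15, 0.75],
    "track_c": [0.1, 0.9, 0.2],
    "track_d": [0.2, 0.8, 0.3],
}

print(recommend(["track_a"], catalog, k=1))
```

With these made-up numbers, a listener who played the energetic "track_a" is steered toward the similar "track_b" rather than the acoustic tracks.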

The Future Landscape of Music and AI

The future implications of AI in music are both exciting and complex. The technology is expected to reshape how music is performed and listened to.

We are likely to see fundamental changes in music-making instruments, Digital Audio Workstations (DAWs), and production tools.

AI is likely to automate core mixing and production tasks, potentially enabling virtual DJs to handle mixing and track selection on their own.

Moreover, the rise of deepfakes and AI-generated content could lead to new forms of collaboration and raise unprecedented legal and ethical questions, altering our perception of authenticity in music.


Spotlight: Rising Stars in the AI Music Scene

In the dynamic world of music, AI music artists are emerging as innovative forces.

This spotlight focuses on these groundbreaking artists and projects, highlighting how they are transforming the music industry with technology.

1. Jukebox by OpenAI

OpenAI's Jukebox represents a significant advancement in the field of AI-generated music. As a neural network, Jukebox is capable of generating music, including basic singing, across a variety of genres and artist styles.

It operates by receiving inputs such as genre, artist, and lyrics, and outputs a completely new music sample produced from scratch. This ability showcases a remarkable leap in AI's capacity to generate complex and diverse musical pieces.

Jukebox's development was motivated by the limitations of symbolic music generators that could not capture the nuances of human voices or the subtle dynamics essential to music.

To overcome these limitations, Jukebox models music directly as raw audio, a challenging task given the extremely long sequences involved.

The technology employed includes a method to compress raw audio into a lower-dimensional space, preserving essential musical elements while discarding some perceptually irrelevant information. This approach allows the model to tackle the high diversity and long-range structure inherent in raw audio music.
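Jukebox performs this compression with a VQ-VAE, a neural network that learns both an encoder and a "codebook" of representative vectors. The sketch below isolates only the vector-quantization step, with a tiny hand-written codebook and raw samples standing in for learned encoder outputs (both are assumptions for illustration). Each frame of audio is replaced by the index of its nearest codebook entry, so a long stream of floats becomes a much shorter stream of integer tokens that a sequence model can then learn to predict.

```python
def frames(samples, size):
    """Split a 1-D signal into non-overlapping frames of `size` samples, dropping any ragged tail."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(samples, codebook):
    """Replace each frame with the index of its nearest codebook vector (the discrete 'token')."""
    size = len(codebook[0])
    codes = []
    for frame in frames(samples, size):
        nearest = min(range(len(codebook)), key=lambda k: squared_distance(frame, codebook[k]))
        codes.append(nearest)
    return codes


def reconstruct(codes, codebook):
    """Decode tokens back to an approximate signal by concatenating their codebook vectors."""
    signal = []
    for k in codes:
        signal.extend(codebook[k])
    return signal


# Hypothetical 3-entry codebook of 4-sample patterns; a learned codebook is far larger.
codebook = [
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, -1.0, -1.0],
    [-1.0, -1.0, 1.0, 1.0],
]
samples = [0.9, 1.1, -0.8, -1.0, 0.05, -0.02, 0.0, 0.01, -0.9, -1.0, 1.0, 0.8]
codes = quantize(samples, codebook)  # 12 floats become 3 integer tokens
approx = reconstruct(codes, codebook)
print(codes, approx)
```

The reconstruction is lossy by design: small details are discarded, which mirrors the trade-off described above between compactness and fidelity.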

The model was trained on a curated dataset of 1.2 million songs, complete with corresponding lyrics and metadata, and uses a variety of techniques to ensure the alignment and coherence of the generated music.

Despite its advancements, Jukebox still has limitations, such as a noticeable gap between its creations and human-generated music, and challenges in reproducing larger musical structures like choruses.

2. Holly Herndon and Spawn

Holly Herndon is an influential figure in the realm of AI music, particularly noted for her innovative use of artificial intelligence in her compositions.

Her 2019 album, "Proto," is a collaborative work involving Herndon, Mat Dryhurst, and programmer Jules LaPlace, and features a singing AI named Spawn.

Spawn is an artificial neural network trained to recognize and replicate human voices, and it learned to create original music by processing audio files, predominantly featuring Herndon's own singing voice. Herndon and her team also trained Spawn to learn diverse vocal types by exposing it to the singing voices of others.

The development of Spawn included "training ceremonies," which were live performances where participants sang to Spawn.

In 2021, Herndon and Dryhurst developed Holly+, an initiative that addresses concerns associated with deepfakes and distributed identity play.

Holly+ is a method to decentralize Herndon’s own identity, allowing the community to determine whether new media created with her voice should be co-sold in collaboration with her.

This system enables the public to upload polyphonic tracks to a website, which are then reinterpreted and performed by a deepfaked version of Herndon’s voice.

Holly+ was showcased in various formats, including a real-time version at the Sonar Festival and a cover version of Dolly Parton’s "Jolene".

3. Yacht: 'Chain Tripping'

Yacht, an indie rock band also known as Young Americans Challenging High Technology, embraced AI for their album 'Chain Tripping'. They fed their entire back catalog (82 songs spanning 17 years) into machine-learning models that analyzed their lyrics and melodies and generated material for new songs.

This collaborative process resulted in a 10-song album that showcases a unique integration of AI in the creative process. Yacht's approach to this album was distinctive; they worked with LA artist Ross Goodwin to create a lyric-generating algorithm.

The algorithm was trained on a vast collection of words, not just from their music but also from their favorite bands and music they grew up listening to.

In producing 'Chain Tripping', Yacht imposed strict rules on themselves, deciding to use only the material generated by the AI without adding anything new.

This subtractive process meant they could remove elements but not add or harmonize, preserving the AI's original output in its purest form.

4. Dadabots: AI-Generated Death Metal

Dadabots, created by CJ Carr and Zack Zukowski, is a project focused on algorithmically generated music. The duo developed a recurrent neural network capable of producing original compositions after being trained on datasets drawn from a single musical genre.

Their early experiments spanned various genres, but they found that metal and punk were particularly suited to the algorithm’s erratic and random nature.

They noted that these genres suited the AI better because the strange artifacts of neural synthesis, such as noise and chaotic voice mutations, sounded aesthetically pleasing in that context.

Dadabots has released ten albums inspired by artists such as Dillinger Escape Plan, Meshuggah, and NOFX. Beyond the music itself, the duo also developed algorithms to design album art and generate song titles.

The most recent Dadabots project is 'Relentless Doppelganger', a non-stop live stream on YouTube. For this project, the Dadabots network was trained on the music of the Canadian technical death metal band Archspire.

The system handled Archspire's fast, technical metal better than any other music it had been trained on before.

The live stream is a continuous flow of intense death metal, creating an impressive simulacrum of the genre with distorted vocals, muddy guitar thrashing, and sharp tempo changes.

5. Authentic Artists

Founded in 2021, Authentic Artists stands as a pioneering force in the intersection of AI and music.

This company distinguishes itself through the creation of diverse virtual characters, including fantastical figures like dragons and cyborg humans.

These characters are not mere digital avatars but complex, AI-driven personalities with unique styles and backstories, illustrating Authentic Artists' commitment to blending imaginative storytelling with music. At the heart of their innovation is their creative AI, which is designed to evolve and mimic human creativity.

This AI is capable of generating new musical compositions across various genres, pushing the boundaries of digital music production.

Their work represents a fusion of technology and music, exploring new territories in music creation and consumption in the digital age.

6. Auxuman and Yona

Auxuman, co-founded by musician Ash Koosha, is another notable entity in the AI music landscape. Its name is short for "Auxiliary Human", and the company aims to revolutionize the music industry through virtual artists.

One of their most prominent creations is Yona, an AI-driven avatar artist with a distinct personality and style. Yona is designed to interact with audiences in a way that challenges the traditional boundaries between human and machine creativity.

However, Auxuman's vision extends far beyond Yona. As of 2019, the company aimed to create a diverse group of virtual artists, each with its own identity and musical style.

This ambition underscores the potential of AI not just in creating a single virtual musician but an entire ecosystem of AI-driven music personalities. Auxuman and Yona symbolize the evolving landscape of music, where AI becomes a central player, offering new avenues for artistic expression and reshaping how audiences experience music.

7. AI-Completed Symphonies

AI-completed symphonies represent a fascinating intersection of historical musicology and modern technology. Projects to complete Beethoven's Tenth Symphony and Mahler's Tenth Symphony have garnered significant attention.

In these endeavors, AI has been employed to reconstruct and complete symphonies that were left unfinished by these legendary composers.

This task involves a complex process where AI algorithms analyze the existing portions of the symphonies, learning the composers' styles, structures, and compositional techniques.

Using this knowledge, the AI suggests ways to finish the symphonies, aiming to stay faithful to the original composer's vision and stylistic nuances.

These endeavors transcend mere musical creation; they are about safeguarding and enhancing the heritage of legendary musicians. This is achieved by merging their classic works with the capabilities of modern technology.
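The analyze-then-continue idea can be illustrated with a far simpler technique than these projects used: a first-order Markov chain that records which note follows which in an existing fragment and then samples a continuation. The input fragment (the opening notes of Beethoven's "Für Elise") and the function names are illustrative assumptions. The actual symphony-completion projects relied on more sophisticated models and on human experts shaping the result.

```python
import random
from collections import defaultdict


def learn_transitions(notes):
    """Map each note to the notes that follow it in the fragment (repeats kept, so frequent moves weigh more)."""
    table = defaultdict(list)
    for current, following in zip(notes, notes[1:]):
        table[current].append(following)
    return dict(table)


def continue_melody(notes, length, seed=0):
    """Sample `length` new notes that extend the fragment using the learned transitions."""
    table = learn_transitions(notes)
    if not table:
        raise ValueError("need at least two notes to learn transitions")
    rng = random.Random(seed)  # seeded so the continuation is reproducible
    melody = list(notes)
    for _ in range(length):
        options = table.get(melody[-1])
        if not options:
            # Dead end (e.g. the fragment's last note never leads anywhere):
            # restart from any note that does have a known successor.
            options = sorted(table)
        melody.append(rng.choice(options))
    return melody[len(notes):]


fragment = ["E", "D#", "E", "D#", "E", "B", "D", "C", "A"]
print(continue_melody(fragment, 8, seed=1))
```

Because the chain only knows one-step transitions from a short fragment, it captures local habits but none of the long-range form a symphony needs, which is exactly the gap the larger models and human editors had to fill.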

8. Collaborative Compositions

The intersection between human artists and AI has produced some astonishing results. Projects such as the one in which violinist Hilary Hahn and composer David Lang worked with AI show the merging of human and machine creativity in a very tangible form.

In these projects, AI is not merely a tool but a collaborative partner in the compositional process.

The AI algorithms analyze and learn from the inputs of the human artists, then contribute their own ideas and patterns to the composition. This collaboration leads to the creation of unique pieces of music that are a product of both human emotion and machine intelligence.

These compositions often reveal new possibilities and explorations in music that might not have been possible through human efforts alone. Such collaborations are reshaping the landscape of musical composition, demonstrating how AI can augment and enhance human creativity in the arts.

Pros and Cons: A Harmonious Balance

While AI in music heralds exciting possibilities, it's crucial to weigh the benefits and challenges.

AI tools offer unparalleled efficiency and innovation, yet they cannot replace the soul and intuition of a human artist.

They serve as tools, not replacements.

Pros of AI in Music

1. Democratization and Enhanced Productivity

AI in music serves as a powerful tool for democratizing music production. By providing accessible platforms, AI enables a wider range of artists to produce and experiment with music, regardless of their technical skill level or access to traditional resources.

This democratization not only broadens the pool of music creators but also enhances the overall productivity of musicians.

The ability of AI to quickly generate new ideas and variations is particularly beneficial, as coming up with fresh musical concepts can be a time-consuming and sometimes frustrating process for artists.

2. Innovation and Creative Expansion

AI's capability to analyze vast amounts of data and learn from existing musical compositions allows for significant innovation in music creation.

This technology can generate original compositions that mimic specific artists or genres, opening new avenues for musical exploration and genre-blending. Such innovation not only extends the boundaries of traditional music-making but also introduces novel sounds and styles that were previously unattainable.

Cons of AI in Music

1. Ethical and Social Challenges

The use of AI in music raises ethical and social questions. Concerns about the ownership of AI-generated music and the potential for bias and stereotypes in the algorithms are at the forefront.

The legal complexities surrounding the rights to AI-created compositions pose significant challenges, as do the implications for human creativity and expression in an increasingly AI-dominated field.

2. Authenticity and Emotional Depth

A crucial challenge of AI in music is the perceived lack of emotion and authenticity in AI-generated compositions. While AI can mimic styles and produce technically proficient music, there's an ongoing debate about whether it can replicate the soul and intuition inherent in human-created music.

This lack of emotional depth can impact the listener's connection to the music, potentially affecting the overall reception and value of AI-generated compositions.

Impact on the Music Industry

The rise of AI-generated music also poses challenges for the music industry's creator economy.

The surge in AI-made songs on platforms like Spotify raises concerns about copyright and authenticity.

Additionally, AI-generated music can disrupt traditional music recommendation algorithms, affecting how users discover and interact with music.

These disruptions necessitate a reevaluation of how music is created, distributed, and consumed in the AI era.

The Crescendo of AI in Music

The symphony of AI music artists is just beginning. As technology advances, the methods of creating, experiencing, and enjoying music are also transforming. Empress offers you an opportunity to join this thrilling journey, elevating your creative potential to unprecedented levels.

Embrace the future of music with Empress – where your vision meets our innovation.

FAQs: AI Music Artists

Q1: How do AI music artists influence the creative process?

AI music artists introduce a level of computational creativity, offering new perspectives and ideas that can inspire human artists and expand the realm of musical possibilities.

Q2: What makes Empress unique in the realm of AI music tools?

Empress stands out for its advanced algorithmic capabilities, enabling nuanced and sophisticated music composition, often with a focus on generating emotionally resonant and complex musical pieces.

Q3: Can beginners use AI tools for audio production?

Yes, beginners can use AI tools for audio production, as many of these tools are designed with user-friendly interfaces and automated features that simplify the music creation process.

Q4: How do AI artists impact the music industry?

AI artists impact the music industry by diversifying the types of music being created, influencing music production techniques, and challenging traditional notions of authorship and creativity.

Q5: What future developments can we expect in AI-driven music?

Future developments in AI-driven music may include more advanced emotional intelligence in compositions, greater collaboration between AI and human artists, and innovative applications in live performances and personalized music experiences.

Follow the future of music with Empress. Check out our blog to learn how you can effectively use these AI music tools.